So after flirting with the idea of buying a MacBook Pro for months, I went with Windows.

But I went with Windows in style.

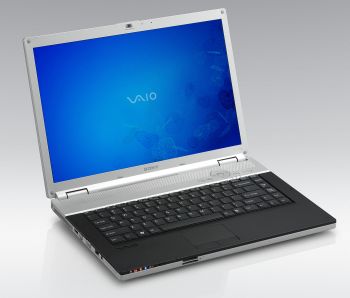

A few days ago, I purchased a brand new Sony VGN-FZ140E notebook computer from the local Circuit City. (Here’s the laptop homepage on Sony’s website.) Circuit City had a deal which was pretty hard to pass up. For the incurably geeky, here are the specs on my new computool:

- Intel Core 2 Duo T7100 processor running at 1.8 GHz

- 15.4-inch widescreen WXGA LCD with reflective coating

- Intel Graphics Media Accelerator X3100

- 200 GB hard drive (only runs at 4500 RPM, unfortunately)

- 2 GB of memory

- Built-in wireless connectivity to 802.11a/b/g, and even n

- Built-in webcam and microphone

- DVD-/+RW drive, which I think has that cool LightScribe labeling thing

- Slots ‘n jacks ‘n ports up the wazoo

- Only 5.75 pounds, including battery

- Windows Vista Home Premium

So why no MacBook Pro? It’s simple: the display for the regular ol’ MacBook is too frickin’ small, and the base model for the MacBook Pro is $2,000 before sales tax and shipping. What did I pay for my Sony? A nice, light $1,200 including sales tax.

And I have to say that this Sony almost matches that Apple cool factor. It’s extremely thin and light, and has this graphite coating that just begs to be caressed. The display is absolutely gorgeous, the brightest and clearest I’ve ever seen. So far, the machine’s been as quiet as a church mouse, it doesn’t heat up unnecessarily during normal use, and the Vista Aero graphics are pretty snappy. I’m not quite used to the keyboard layout yet, but the action is phenomenal — the keys are almost flat, like the MacBook’s, and they don’t clatter loud enough to wake the neighbors.

All in all, this should be powerful enough to do what I intend to do on this laptop. Which is plunk my ass down in a series of Starbucks and write Geosynchron, the third book in the Jump 225 Trilogy. There will be the occasional bit of web contract work on here, but again, I mostly reserve that for my desktop.

I’d gotten used to all kinds of inconveniences with my 2003-vintage Toshiba notebook. The lid doesn’t open and close properly, hibernation doesn’t work, there’s no built-in WiFi, and the thing vents out the bottom, so if you stick it on a cushioned surface it overheats and shuts down. Almost any new laptop I bought would have solved those problems, but the Sony VAIO solved problems I didn’t realize I had. Like the fact that all of the ports are exactly where I want them to be, and the power jack includes an L-shaped connector that makes the cord take up less space.

So what are the immediate downsides I see to this machine?

- The trackpad is a bit smaller than usual, and it’s almost completely flush with the rest of the casing. Seriously, it’s only recessed about a millimeter. This means that half the time I have to slide my finger around for a second or two to actually find the trackpad. It doesn’t help that the trackpad is black with black buttons, so it’s almost completely camouflaged. In low-light situations, you can barely even tell it’s there.

- The sound is a lot tinnier than I expected. I probably should have gone for the model with the fancy-schmancy Harman Kardon speakers, but I suppose it’s not really that big of a deal. I listen to most of my music on the desktop anyway, and if I’m going to watch DVDs I’ll be using headphones.

- No Bluetooth. Which isn’t a tragedy for me, considering that I don’t really have any Bluetooth gadgets. But I was really hoping to start Bluetoothing my office so I can get rid of some of those wires. Guess I can always go buy an expansion card.

- The integrated video isn’t powerful enough to let me run advanced games, which probably won’t be too much of an issue considering I do the little gaming I do on the desktop PC.
