As part of the research for my next book, MultiReal, I’ve been thinking a lot about mind uploading.

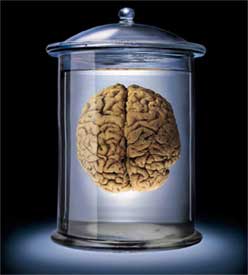

Mind uploading is a transhumanist concept wherein you take a human brain and digitize it. We’re not just talking about scanning and mapping here; the goal is to have a fully functioning mind that can exist outside of all this defective muscle, bone, and tissue you cart around with you. Science fiction authors have been kicking the idea around forever. Wikipedia cites Philip K. Dick and Roger Zelazny as some of the earliest SFnal treatments of mind uploading, but you could make a good argument that Mary Shelley got there first with her Frankenstein; or, The Modern Prometheus in 1818.

In theory, mind uploading is a pathway to immortality, and there are real organizations of thinkers, philosophers, and scientists working to make it happen. I’m betting not only that it will happen, but that the writers just might get there first.

Let me back up.

Suppose you manage to “digitize” the human brain — whatever that means — and store the whole thing on a massive supercomputer. You run the program, virtual neurons start firing. How do you know it’s actually working? How can you tell that you’ve got an actual mind and not just a random collection of hopped-up virtual nerves?

Naturally you’d use the Turing Test. The Turing Test, proposed by visionary Alan Turing in 1950, says that if a machine can converse so convincingly that human judges can’t tell it apart from a person, then for all practical purposes it’s an intelligent entity. So if we plug your spouse into that supercomputer, have her talk to your uploaded mind, and she can’t tell whether she’s talking to the flesh-and-bone you or the bits-and-bytes you, we’ve succeeded.

(Now there have been lots of objections raised to Alan Turing’s hypothesis. Some of them are of the predictable, nonsensical, religious variety, but some of them do seem legit. The Wikipedia article on the Turing Test spells them out quite nicely. My experience with cognitive science is limited to a semester in college, reading books by Ray Kurzweil and Rudy Rucker, six years of therapy, and futzing around in Wikipedia, so take my scientific opinions here with a gargantuan pillar of salt. But it seems to me that if you could put a digital brain and a meat brain in the same situation and they both make identical choices, you’ve succeeded in mind uploading.)

So the bar to clear in order to declare ourselves successfully uploaded isn’t as high as you might initially think. We need a program that can successfully imitate everything you do and convince anyone on the planet it’s the real thing. Once we have that program, we could theoretically rebuild your mind, back it up, even transfer it into the body of a super-soldier à la John Scalzi’s Old Man’s War. (Although only Scalzi can make them wisecrack so.)

We probably don’t need to map out every single one of the umpty-ump trillion molecules in the human brain to do it. We can take mathematical shortcuts. We can eliminate a lot of the redundancy and vestigial functionality in the human brain that we don’t use or don’t need. (Would you really be less of an intelligent entity if we could smooth out the neurological wrinkles that cause deja vu, for instance?)

In short, we treat the human brain like the ultimate black box. We know what the desired outcome is — a program that acts just like you do — and we don’t really care how we get there.

So how would you create such a program?

Again, I’m no cognitive scientist (see caveat above). But presumably you could create such a program through pattern recognition. Feed some analytical computer gajillions and bazillions of samples of your thought processes, and let the computer sniff out the patterns and logical rules. Eventually, if you provide this computer with enough data points (thoughts, in other words), it should be able to create a simulation that performs identically to your real brain. The more you input, the greater the precision.
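For the programmers in the audience, here’s a toy version of the "feed it samples, let it sniff out patterns" idea. It’s a word-pair (bigram) model, which is my own stand-in and nowhere near a brain simulation: it only learns which word tends to follow which. The function names and the three sample sentences are made up for illustration.

```python
import random
from collections import defaultdict


def train_bigram_model(samples):
    """Map each word to the list of words that followed it in the samples."""
    model = defaultdict(list)
    for text in samples:
        words = text.split()
        for current, following in zip(words, words[1:]):
            model[current].append(following)
    return model


def imitate(model, start, length=8, seed=0):
    """Generate up to `length` words by repeatedly picking an observed follower."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    word = start
    output = [word]
    for _ in range(length - 1):
        followers = model.get(word)
        if not followers:  # dead end: this word never had a successor
            break
        word = rng.choice(followers)
        output.append(word)
    return " ".join(output)


# Hypothetical "thought samples"; a real attempt would need vastly more text.
samples = [
    "the mind is a black box",
    "the mind is a pattern",
    "the brain is a machine",
]
model = train_bigram_model(samples)
print(imitate(model, "the"))
```

Every word pair it produces is one it actually saw in the samples, so more samples give it more patterns to draw on. That’s the "more you input, the greater the precision" intuition in miniature, and nothing more than that.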

Name me a class of people who routinely record their thought processes for a living.

Correct! Writers.

Looking at my own output, I’ve got a good head start. If you total the word counts for Infoquake and the current draft of MultiReal, you’ve got about 225,000 words. Throw in all of the reviews and interviews I published in the mid-’90s and all of the posts on this blog. The word count adds up.

Yes, I know what objection you’re going to make. Writing isn’t the same as thinking. The thoughts you put down on paper are only a selective filter of the things going on in your head. And this is true enough. What I’ve written about the adventures of Natch, Horvil, and Jara doesn’t tell you much of anything about my feelings and opinions. You could spend ten years studying Infoquake cover to cover and still have no idea whether I prefer white, wheat, or rye.

But what about biometrics?

We’ve come up with all kinds of identifying biometrics, from the shape of your irises to the way you walk to the rhythm of your typing, and they’re increasingly in use every day. We know how to scan in your fingerprint, store it digitally, and then recreate that fingerprint from scratch.

Is it so far-fetched to think that we could come up with a biometric for how you write? Only you write the exact sentences, phrases, and punctuation that you do. Of all the billions and trillions of word choices you could have possibly made, you chose this exact combination. Could there possibly be any more explicit record of your thought processes? Every comma, every period, every space is an indelible record of how your mind processed and analyzed that particular choice. Give the supercomputer access to your rough drafts — or better yet, record every minute of the composition process with video capture software and let the supercomputer analyze how you edit and revise — and you might be surprised at the patterns it could find.
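A crude version of a writing biometric is easy enough to sketch. The snippet below is only an illustration under my own assumptions: it reduces a text to the relative frequencies of a few common words and punctuation marks, then compares two such "fingerprints" with cosine similarity. The word list, the punctuation list, and the function names are all arbitrary choices for the example, not an established standard.

```python
import math
import re
from collections import Counter

# Assumed feature set: a handful of common words plus punctuation marks.
FUNCTION_WORDS = ["the", "and", "of", "to", "a", "in", "that", "but", "i", "you"]
PUNCTUATION = [",", ";", ".", "?", "!"]


def fingerprint(text):
    """Return the relative frequency of each feature in the text."""
    tokens = re.findall(r"[a-z']+|[,;.?!]", text.lower())
    counts = Counter(tokens)
    total = len(tokens) or 1  # avoid dividing by zero on empty text
    return [counts[feature] / total for feature in FUNCTION_WORDS + PUNCTUATION]


def similarity(a, b):
    """Cosine similarity between two fingerprints (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


draft = "I think, and I doubt; but you know that I write the way I think."
revision = "You know the way I write, and I doubt that you think of it."
print(similarity(fingerprint(draft), fingerprint(revision)))
```

A score near 1.0 means two texts lean on these small words and marks in similar proportions. Whether that says anything about the mind behind them is exactly the open question.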

Couldn’t our theoretical analytical supercomputer reverse engineer those thought patterns and recreate a Turing Test-functional simulation of the brain that came up with them?

Think of the mind of William Shakespeare as the ultimate black box, and his complete works as the data output from that black box. If we could feed all that data into our theoretical supercomputer and determine the neural patterns necessary to create such a body of work, we could, maybe, theoretically, some day, after lots of trial and error, recreate Shakespeare’s brain. (Or Adolf Hitler’s. We’ve still got Mein Kampf. They saved Hitler’s brain, indeed.)

Of course, this scenario is not without its problems. How can the pattern recognition software tell my words apart from the words my copy editor or proofreader sticks in the text? Take a writer like Shakespeare, whose works have been copied and recopied and edited and amended until we’re not even sure who wrote them anymore. We could end up with a brain that’s 90% William Shakespeare, 7% Anne Hathaway, 2% Francis Bacon, and 1% the proofreader at W.W. Norton. How’s that for an ethical quandary?

But I’m an optimist by nature. I’ve always doubted that we’d figure out a way to transfer the human mind into a gleaming metal android body before I kick the bucket. But perhaps, just perhaps, we already have a way to at least back up a human consciousness and recreate it in some theoretical future.

Now if the prospect of immortality ain’t motivation for me to finish MultiReal, I don’t know what is.